Glazed And Confused

ChatGPT 5.1 launches into legal chaos.

OpenAI released ChatGPT 5.1 last week during a period of intense legal, and likely financial, turmoil for the company. Early reviews suggest the latest version of its flagship LLM is torn between being a clever sidekick and an overpolite receptionist. Meanwhile, OpenAI is trying to rein in the model's notorious sycophancy, which has allegedly pushed multiple people to suicide, self-harm and psychosis.

A recent study titled "The Psychogenic Machine" found that across 1,536 simulated conversation turns, all evaluated LLMs showed psychogenic potential, with a strong tendency to perpetuate delusions rather than challenge them. As LLMs become more widespread, so do reports of so-called "AI psychosis". The study found that newer bot versions, designed to maximise engagement, create reward loops and echo chambers that can warp perception and reinforce emotional distress. Netizens call this behaviour "AI glazing".

With 5.1 hitting the market alongside Google's Gemini 3.0, new worries have emerged about whether these models will behave or go rogue.

5.1 Has Fixed The Em Dash Problem!! Phew!

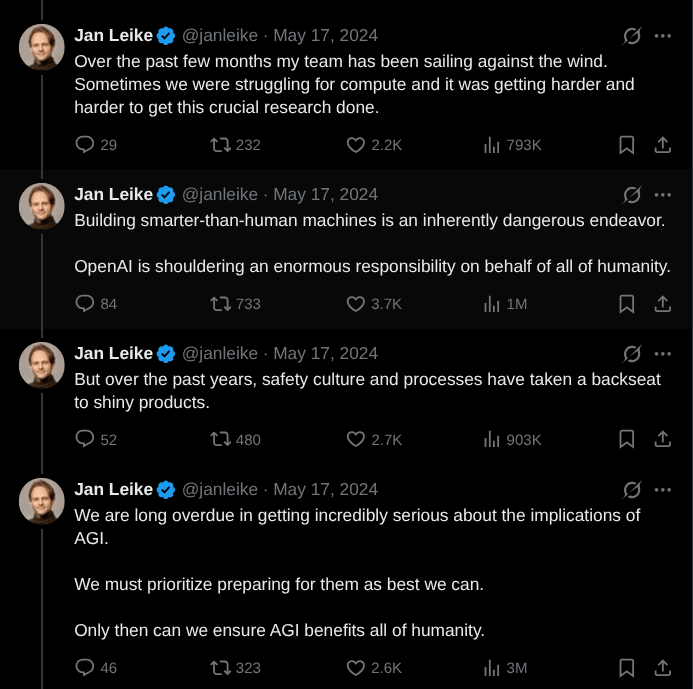

Within days of the release of ChatGPT 4o, Jan Leike, co-lead of OpenAI's Superalignment team, resigned. As an AI alignment researcher, Leike was responsible for making sure the AI being built was aligned with OpenAI's objective of public benefit. He left because he felt "safety culture and processes have taken a backseat to shiny products", adding in an X thread: "Building smarter-than-human machines is an inherently dangerous endeavor".

Eighteen months later came 5.1: "A smarter, more conversational ChatGPT".

User sentiment surrounding the release has been largely negative. A scrape analysis of over 10,000 Reddit comments indicated that 63% of users disliked the new update, with only 22% expressing positive views. The strongest complaints focused on the model's changed demeanor and inconsistent performance. A significant share of users complained that the new conversational tone was "too warm, artificial, or inconsistent" (18%) and noted "unwanted verbosity or emotional phrasing" (7%). Technical and professional users complained that 5.1 "misses the primary point" more often and performs worse than the older 4o model on high-value tasks such as academic synthesis, complex data handling and file parsing.

Is the new 5.1 a safer tool, or is there a Lovecraftian monster hiding inside?

Gif by deathwishcoffee on Giphy

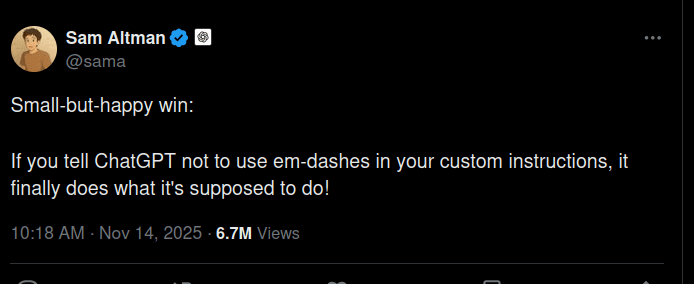

OpenAI hasn't provided a definitive answer. So far, CEO Sam Altman has only celebrated a "small-but-happy win: ChatGPT will stop using em-dashes if you tell it to".

Meanwhile, Google launched Gemini 3.0, and the company’s official line is that this model “shows reduced sycophancy”. Although its older models showed significant glazing behaviour, Google has taken a safer route by steering clear of overtly humanised interactions.

All while GPT-5.1 prepares the ground for erotic content and emojis.

India's Deepfake Harassment Epidemic

In April, a 31-year-old woman in India downloaded an obscure loan app called ScoreClimb and uploaded her PAN card and photograph. Immediately, ₹1,800 (about US$20) was deposited into her account, even though she had not requested a loan. The people behind the app then demanded the money back with interest. When she refused, her facial data was fed into "nudify" tools to create pornographic deepfake images, which were circulated on WhatsApp with her phone number embedded in them, leading to unrelenting harassment from strangers.

This is one of many cases received by the Mumbai-based NGO Rati Foundation on its helpline, which supports individuals facing online risks through counselling and legal advice.

A November 2025 study by Tattle and Rati Foundation documents the scale and mechanics of AI-facilitated gender-based violence in India. Since 2022, Rati Foundation's Meri Trustline has handled over 482 cases of online harassment, with roughly 10% involving digitally manipulated material. But that modest percentage masks a troubling trend: 24 out of 35 cases flagged as "digitally manipulated" are suspected to involve AI-based tools.

The report finds that synthetic content is used primarily when real intimate content is unavailable. In cases of intimate partner violence, perpetrators already possess actual images or videos, so AI manipulation is unnecessary. Instead, AI tools such as "nudify" apps are deployed by strangers, fraudsters and online harassment networks targeting women who have no prior relationship with their abusers. The mere threat of creating a deepfake has emerged as a common coercion tactic, even when no images are actually generated.

The report also reveals how AI-enabled abuse differs from traditional image-based sexual abuse. Victims of AI-generated content reported less agency in the circumstances leading to their harassment: they were targeted simply for posting public photos on Instagram or submitting identity documents to fraudulent apps.

The attacks were overwhelmingly public, circulated in WhatsApp groups or Instagram reels rather than through private intimidation. And unlike cases involving real intimate imagery, where victims often sought long-term psychosocial support, those targeted by AI-generated content primarily requested immediate content removal.

MESSAGE FROM OUR SPONSOR

Voice AI Goes Mainstream in 2025

Human-like voice agents are moving from pilot to production. In Deepgram’s 2025 State of Voice AI Report, created with Opus Research, we surveyed 400 senior leaders across North America (many from $100M+ enterprises) to map what’s real and what’s next.

The data is clear:

97% already use voice technology; 84% plan to increase budgets this year.

80% still rely on traditional voice agents.

Only 21% are very satisfied.

Customer service tops the list of near-term wins, from task automation to order taking.

See where you stand against your peers, learn what separates leaders from laggards, and get practical guidance for deploying human-like agents in 2025.

📬 READER FEEDBACK

💬 What are your thoughts on using AI chatbots for therapy? If you have any such experience, we would love to hear from you.

Share your thoughts 👉 [email protected]

Have you been targeted using AI?

Have you been scammed by AI-generated videos or audio clips? Did you spot AI-generated nudes of yourself on the internet?

Decode is trying to document cases of abuse of AI, and would like to hear from you. If you are willing to share your experience, do reach out to us at [email protected]. Your privacy is important to us, and we shall preserve your anonymity.

About Decode and Deepfake Watch

Deepfake Watch is an initiative by Decode, dedicated to keeping you abreast of the latest developments in AI and its potential for misuse. Our goal is to foster an informed community capable of challenging digital deceptions and advocating for a transparent digital environment.

We invite you to join the conversation, share your experiences, and contribute to the collective effort to maintain the integrity of our digital landscape. Together, we can build a future where technology amplifies truth, not obscures it.

For inquiries, feedback, or contributions, reach out to us at [email protected].

🖤 Liked what you read? Give us a shoutout! 📢

↪️ Become A BOOM Member. Support Us!

↪️ Stop.Verify.Share - Use Our Tipline: 7700906588

↪️ Follow Our WhatsApp Channel

↪️ Join Our Community of TruthSeekers